Compare commits

No commits in common. "main" and "v0.3" have entirely different histories.

@ -1 +0,0 @@
github: [meeb]

@ -4,10 +4,12 @@ env:
IMAGE_NAME: tubesync

on:
workflow_dispatch:
push:
branches:
- main
pull_request:
branches:
- main

jobs:
test:

@ -25,7 +27,7 @@ jobs:
run: |
python -m pip install --upgrade pip
pip install pipenv
pipenv install --system --skip-lock
pipenv install --system
- name: Set up Django environment
run: cp tubesync/tubesync/local_settings.py.example tubesync/tubesync/local_settings.py
- name: Run Django tests

@ -33,24 +35,13 @@ jobs:
containerise:
runs-on: ubuntu-latest
steps:
- name: Set up QEMU
uses: docker/setup-qemu-action@v1
- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v1
- name: Log into GitHub Container Registry
run: echo "${{ secrets.REGISTRY_ACCESS_TOKEN }}" | docker login https://ghcr.io -u ${{ github.actor }} --password-stdin
- name: Lowercase github username for ghcr
id: string
uses: ASzc/change-string-case-action@v1
with:
string: ${{ github.actor }}
- name: Build and push
uses: docker/build-push-action@v2
with:
platforms: linux/amd64,linux/arm64
push: true
tags: ghcr.io/${{ steps.string.outputs.lowercase }}/${{ env.IMAGE_NAME }}:latest
cache-from: type=registry,ref=ghcr.io/${{ steps.string.outputs.lowercase }}/${{ env.IMAGE_NAME }}:latest
cache-to: type=inline
build-args: |
IMAGE_NAME=${{ env.IMAGE_NAME }}
- uses: actions/checkout@v2
- name: Build the container image
run: docker build . --tag $IMAGE_NAME
- name: Log into GitHub Container Registry
run: echo "${{ secrets.REGISTRY_ACCESS_TOKEN }}" | docker login https://ghcr.io -u ${{ github.actor }} --password-stdin
- name: Push image to GitHub Container Registry
run: |
LATEST_TAG=ghcr.io/meeb/$IMAGE_NAME:latest
docker tag $IMAGE_NAME $LATEST_TAG
docker push $LATEST_TAG
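The `Lowercase github username for ghcr` step exists because repository path components in an image reference must be lowercase, while `github.actor` can contain capitals. Below is a minimal Python sketch of the reference that the `Build and push` step assembles. It assumes the path-component rule from the Docker distribution reference grammar. The function names are illustrative and not part of the workflow.

```python
import re

# Path-component rule from the Docker distribution reference grammar:
# lowercase alphanumerics, optionally joined by '.', '_', '__' or runs of '-'.
PATH_COMPONENT = re.compile(r"[a-z0-9]+(?:(?:[._]|__|-+)[a-z0-9]+)*")


def is_valid_component(name: str) -> bool:
    return PATH_COMPONENT.fullmatch(name) is not None


def ghcr_image_ref(actor: str, image_name: str, tag: str = "latest") -> str:
    """Build ghcr.io/<owner>/<image>:<tag>, lowercasing the owner first."""
    owner = actor.lower()  # the value the workflow reads as steps.string.outputs.lowercase
    for part in (owner, image_name):
        if not is_valid_component(part):
            raise ValueError(f"invalid repository path component: {part!r}")
    return f"ghcr.io/{owner}/{image_name}:{tag}"


if __name__ == "__main__":
    print(is_valid_component("Meeb"))          # False: uppercase is rejected
    print(ghcr_image_ref("Meeb", "tubesync"))  # ghcr.io/meeb/tubesync:latest
```

The tag part is left untouched because tags, unlike repository names, may contain uppercase characters.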

@ -11,28 +11,18 @@ jobs:
containerise:
runs-on: ubuntu-latest
steps:
- name: Set up QEMU
uses: docker/setup-qemu-action@v1
- name: Get tag
id: tag
uses: dawidd6/action-get-tag@v1
- uses: docker/build-push-action@v2
- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v1
- name: Log into GitHub Container Registry
run: echo "${{ secrets.REGISTRY_ACCESS_TOKEN }}" | docker login https://ghcr.io -u ${{ github.actor }} --password-stdin
- name: Lowercase github username for ghcr
id: string
uses: ASzc/change-string-case-action@v1
with:
string: ${{ github.actor }}
- name: Build and push
uses: docker/build-push-action@v2
with:
platforms: linux/amd64,linux/arm64
push: true
tags: ghcr.io/${{ steps.string.outputs.lowercase }}/${{ env.IMAGE_NAME }}:${{ steps.tag.outputs.tag }}
cache-from: type=registry,ref=ghcr.io/${{ steps.string.outputs.lowercase }}/${{ env.IMAGE_NAME }}:${{ steps.tag.outputs.tag }}
cache-to: type=inline
build-args: |
IMAGE_NAME=${{ env.IMAGE_NAME }}
- uses: actions/checkout@v2
- name: Get tag
id: vars
run: echo ::set-output name=tag::${GITHUB_REF#refs/*/}
- name: Build the container image
run: docker build . --tag $IMAGE_NAME
- name: Log into GitHub Container Registry
run: echo "${{ secrets.REGISTRY_ACCESS_TOKEN }}" | docker login https://ghcr.io -u ${{ github.actor }} --password-stdin
- name: Push image to GitHub Container Registry
env:
RELEASE_TAG: ${{ steps.vars.outputs.tag }}
run: |
REF_TAG=ghcr.io/meeb/$IMAGE_NAME:$RELEASE_TAG
docker tag $IMAGE_NAME $REF_TAG
docker push $REF_TAG
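The `Get tag` step above derives the release tag with the shell expansion `${GITHUB_REF#refs/*/}`. This expansion removes the shortest leading match of `refs/*/`, so the ref `refs/tags/v0.3` becomes `v0.3`. Below is a small Python emulation of that `#` operator. It assumes `fnmatch` glob semantics are close enough for this pattern, since both let `*` match `/`. The helper names are hypothetical.

```python
from fnmatch import fnmatchcase


def strip_shortest_prefix(value: str, pattern: str) -> str:
    """Emulate the shell expansion ${value#pattern}."""
    # Try prefixes from shortest to longest; the first glob match is removed.
    for end in range(len(value) + 1):
        if fnmatchcase(value[:end], pattern):
            return value[end:]
    return value  # no prefix matches: the shell leaves the value unchanged


def tag_from_ref(github_ref: str) -> str:
    return strip_shortest_prefix(github_ref, "refs/*/")


if __name__ == "__main__":
    print(tag_from_ref("refs/tags/v0.3"))        # v0.3
    print(tag_from_ref("refs/heads/feature/x"))  # feature/x
```

The shortest-match form matters for names that contain slashes. The longest-match form `##` would reduce `refs/heads/feature/x` to just `x`.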

@ -1,4 +1,3 @@
.DS_Store
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]

@ -131,6 +130,3 @@ dmypy.json
# Pyre type checker
.pyre/

Pipfile.lock
.vscode/launch.json

Dockerfile

@ -1,112 +1,71 @@
FROM debian:bookworm-slim
FROM debian:buster-slim

ARG TARGETPLATFORM
ARG S6_VERSION="3.1.5.0"
ARG FFMPEG_DATE="autobuild-2023-11-29-14-19"
ARG FFMPEG_VERSION="112875-g47e214245b"
ARG ARCH="amd64"
ARG S6_VERSION="2.1.0.2"
ARG FFMPEG_VERSION="4.3.1"

ENV DEBIAN_FRONTEND="noninteractive" \
HOME="/root" \
LANGUAGE="en_US.UTF-8" \
LANG="en_US.UTF-8" \
LC_ALL="en_US.UTF-8" \
TERM="xterm" \
S6_CMD_WAIT_FOR_SERVICES_MAXTIME="0"
HOME="/root" \
LANGUAGE="en_US.UTF-8" \
LANG="en_US.UTF-8" \
LC_ALL="en_US.UTF-8" \
TERM="xterm" \
S6_EXPECTED_SHA256="52460473413601ff7a84ae690b161a074217ddc734990c2cdee9847166cf669e" \
S6_DOWNLOAD="https://github.com/just-containers/s6-overlay/releases/download/v${S6_VERSION}/s6-overlay-${ARCH}.tar.gz" \
FFMPEG_EXPECTED_SHA256="47d95c0129fba27d051748a442a44a73ce1bd38d1e3f9fe1e9dd7258c7581fa5" \
FFMPEG_DOWNLOAD="https://johnvansickle.com/ffmpeg/releases/ffmpeg-${FFMPEG_VERSION}-${ARCH}-static.tar.xz"

# Install third party software
RUN export ARCH=$(case ${TARGETPLATFORM:-linux/amd64} in \
"linux/amd64") echo "amd64" ;; \
"linux/arm64") echo "aarch64" ;; \
*) echo "" ;; esac) && \
export S6_ARCH_EXPECTED_SHA256=$(case ${TARGETPLATFORM:-linux/amd64} in \
"linux/amd64") echo "65d0d0f353d2ff9d0af202b268b4bf53a9948a5007650854855c729289085739" ;; \
"linux/arm64") echo "3fbd14201473710a592b2189e81f00f3c8998e96d34f16bd2429c35d1bc36d00" ;; \
*) echo "" ;; esac) && \
export S6_DOWNLOAD_ARCH=$(case ${TARGETPLATFORM:-linux/amd64} in \
"linux/amd64") echo "https://github.com/just-containers/s6-overlay/releases/download/v${S6_VERSION}/s6-overlay-x86_64.tar.xz" ;; \
"linux/arm64") echo "https://github.com/just-containers/s6-overlay/releases/download/v${S6_VERSION}/s6-overlay-aarch64.tar.xz" ;; \
*) echo "" ;; esac) && \
export FFMPEG_EXPECTED_SHA256=$(case ${TARGETPLATFORM:-linux/amd64} in \
"linux/amd64") echo "36bac8c527bf390603416f749ab0dd860142b0a66f0865b67366062a9c286c8b" ;; \
"linux/arm64") echo "8f36e45d99d2367a5c0c220ee3164fa48f4f0cec35f78204ccced8dc303bfbdc" ;; \
*) echo "" ;; esac) && \
export FFMPEG_DOWNLOAD=$(case ${TARGETPLATFORM:-linux/amd64} in \
"linux/amd64") echo "https://github.com/yt-dlp/FFmpeg-Builds/releases/download/${FFMPEG_DATE}/ffmpeg-N-${FFMPEG_VERSION}-linux64-gpl.tar.xz" ;; \
"linux/arm64") echo "https://github.com/yt-dlp/FFmpeg-Builds/releases/download/${FFMPEG_DATE}/ffmpeg-N-${FFMPEG_VERSION}-linuxarm64-gpl.tar.xz" ;; \
*) echo "" ;; esac) && \
export S6_NOARCH_EXPECTED_SHA256="fd80c231e8ae1a0667b7ae2078b9ad0e1269c4d117bf447a4506815a700dbff3" && \
export S6_DOWNLOAD_NOARCH="https://github.com/just-containers/s6-overlay/releases/download/v${S6_VERSION}/s6-overlay-noarch.tar.xz" && \
echo "Building for arch: ${ARCH}, downloading S6 from: ${S6_DOWNLOAD_ARCH}, expecting S6 SHA256: ${S6_ARCH_EXPECTED_SHA256}" && \
set -x && \
apt-get update && \
apt-get -y --no-install-recommends install locales && \
echo "en_US.UTF-8 UTF-8" > /etc/locale.gen && \
locale-gen en_US.UTF-8 && \
# Install required distro packages
apt-get -y --no-install-recommends install curl ca-certificates binutils xz-utils && \
# Install s6
curl -L ${S6_DOWNLOAD_NOARCH} --output /tmp/s6-overlay-noarch.tar.xz && \
echo "${S6_NOARCH_EXPECTED_SHA256} /tmp/s6-overlay-noarch.tar.xz" | sha256sum -c - && \
tar -C / -Jxpf /tmp/s6-overlay-noarch.tar.xz && \
curl -L ${S6_DOWNLOAD_ARCH} --output /tmp/s6-overlay-${ARCH}.tar.xz && \
echo "${S6_ARCH_EXPECTED_SHA256} /tmp/s6-overlay-${ARCH}.tar.xz" | sha256sum -c - && \
tar -C / -Jxpf /tmp/s6-overlay-${ARCH}.tar.xz && \
# Install ffmpeg
echo "Building for arch: ${ARCH}, downloading FFMPEG from: ${FFMPEG_DOWNLOAD}, expecting FFMPEG SHA256: ${FFMPEG_EXPECTED_SHA256}" && \
curl -L ${FFMPEG_DOWNLOAD} --output /tmp/ffmpeg-${ARCH}.tar.xz && \
sha256sum /tmp/ffmpeg-${ARCH}.tar.xz && \
echo "${FFMPEG_EXPECTED_SHA256} /tmp/ffmpeg-${ARCH}.tar.xz" | sha256sum -c - && \
tar -xf /tmp/ffmpeg-${ARCH}.tar.xz --strip-components=2 --no-anchored -C /usr/local/bin/ "ffmpeg" && \
tar -xf /tmp/ffmpeg-${ARCH}.tar.xz --strip-components=2 --no-anchored -C /usr/local/bin/ "ffprobe" && \
# Clean up
rm -rf /tmp/s6-overlay-noarch.tar.xz /tmp/s6-overlay-${ARCH}.tar.xz && \
rm -rf /tmp/ffmpeg-${ARCH}.tar.xz && \
apt-get -y autoremove --purge curl binutils xz-utils
RUN set -x && \
apt-get update && \
apt-get -y --no-install-recommends install locales && \
echo "en_US.UTF-8 UTF-8" > /etc/locale.gen && \
locale-gen en_US.UTF-8 && \
# Install required distro packages
apt-get -y --no-install-recommends install curl xz-utils ca-certificates binutils && \
# Install s6
curl -L ${S6_DOWNLOAD} --output /tmp/s6-overlay-${ARCH}.tar.gz && \
sha256sum /tmp/s6-overlay-${ARCH}.tar.gz && \
echo "${S6_EXPECTED_SHA256} /tmp/s6-overlay-${ARCH}.tar.gz" | sha256sum -c - && \
tar xzf /tmp/s6-overlay-${ARCH}.tar.gz -C / && \
# Install ffmpeg
curl -L ${FFMPEG_DOWNLOAD} --output /tmp/ffmpeg-${ARCH}-static.tar.xz && \
echo "${FFMPEG_EXPECTED_SHA256} /tmp/ffmpeg-${ARCH}-static.tar.xz" | sha256sum -c - && \
xz --decompress /tmp/ffmpeg-${ARCH}-static.tar.xz && \
tar -xvf /tmp/ffmpeg-${ARCH}-static.tar -C /tmp && \
install -v -s -g root -o root -m 0755 -s /tmp/ffmpeg-${FFMPEG_VERSION}-${ARCH}-static/ffmpeg -t /usr/local/bin && \
# Clean up
rm -rf /tmp/s6-overlay-${ARCH}.tar.gz && \
rm -rf /tmp/ffmpeg-${ARCH}-static.tar && \
rm -rf /tmp/ffmpeg-${FFMPEG_VERSION}-${ARCH}-static && \
apt-get -y autoremove --purge curl xz-utils binutils

# Copy app
COPY tubesync /app
COPY tubesync/tubesync/local_settings.py.container /app/tubesync/local_settings.py

# Copy over pip.conf to use piwheels
COPY pip.conf /etc/pip.conf
# Append container bundled software versions
RUN echo "ffmpeg_version = '${FFMPEG_VERSION}-static'" >> /app/common/third_party_versions.py

# Add Pipfile
COPY Pipfile /app/Pipfile
COPY Pipfile.lock /app/Pipfile.lock

# Switch workdir to the app
WORKDIR /app

# Set up the app
RUN set -x && \
apt-get update && \
# Install required distro packages
apt-get -y install nginx-light && \
apt-get -y --no-install-recommends install \
python3 \
python3-dev \
python3-pip \
python3-wheel \
pipenv \
gcc \
g++ \
make \
pkgconf \
default-libmysqlclient-dev \
libmariadb3 \
postgresql-common \
libpq-dev \
libpq5 \
libjpeg62-turbo \
libwebp7 \
libjpeg-dev \
zlib1g-dev \
libwebp-dev \
redis-server && \
apt-get -y --no-install-recommends install python3 python3-setuptools python3-pip python3-dev gcc make && \
# Install pipenv
pip3 --disable-pip-version-check install pipenv && \
# Create an 'app' user which the application will run as
groupadd app && \
useradd -M -d /app -s /bin/false -g app app && \
# Install non-distro packages
PIPENV_VERBOSITY=64 pipenv install --system --skip-lock && \
pipenv install --system && \
# Make absolutely sure we didn't accidentally bundle a SQLite dev database
rm -rf /app/db.sqlite3 && \
# Run any required app commands
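On the `main` side, the install step chooses per-platform artefacts from `${TARGETPLATFORM:-linux/amd64}` using `case` blocks, then checks every download with `sha256sum -c`. The sketch below restates the s6-overlay part in Python purely as an illustration. The dictionary and function names are hypothetical. Only the file suffixes, the default platform and the empty fallback come from the Dockerfile hunk above.

```python
import hashlib
from pathlib import Path
from typing import Optional

# Mirrors the S6_DOWNLOAD_ARCH case block: Docker platform -> s6-overlay file suffix.
S6_SUFFIX = {"linux/amd64": "x86_64", "linux/arm64": "aarch64"}


def s6_suffix(target_platform: Optional[str]) -> str:
    # ${TARGETPLATFORM:-linux/amd64} falls back when the variable is unset or empty.
    platform = target_platform or "linux/amd64"
    # The `*) echo ""` branch: unknown platforms produce an empty string.
    return S6_SUFFIX.get(platform, "")


def sha256_matches(path: Path, expected_hex: str) -> bool:
    """Rough equivalent of `echo "<sha> <file>" | sha256sum -c -`."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # Hash in chunks so large archives are not read into memory at once.
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex.strip().lower()
```

The `*)` branch yields an empty string rather than failing on the spot. As a result, an unsupported platform only surfaces later in the `&&` chain, when the download or checksum step fails.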

@ -119,19 +78,10 @@ RUN set -x && \
mkdir -p /downloads/video && \
# Clean up
rm /app/Pipfile && \
rm /app/Pipfile.lock && \
pipenv --clear && \
apt-get -y autoremove --purge \
python3-pip \
python3-dev \
gcc \
g++ \
make \
default-libmysqlclient-dev \
postgresql-common \
libpq-dev \
libjpeg-dev \
zlib1g-dev \
libwebp-dev && \
pip3 --disable-pip-version-check uninstall -y pipenv wheel virtualenv && \
apt-get -y autoremove --purge python3-pip python3-dev gcc make && \
apt-get -y autoremove && \
apt-get -y autoclean && \
rm -rf /var/lib/apt/lists/* && \

@ -141,12 +91,7 @@ RUN set -x && \
rm -rf /root && \
mkdir -p /root && \
chown root:root /root && \
chmod 0755 /root

# Append software versions
RUN set -x && \
FFMPEG_VERSION=$(/usr/local/bin/ffmpeg -version | head -n 1 | awk '{ print $3 }') && \
echo "ffmpeg_version = '${FFMPEG_VERSION}'" >> /app/common/third_party_versions.py
chmod 0700 /root

# Copy root
COPY config/root /
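Judging from the hunks above, the two branches record the bundled ffmpeg version differently. `v0.3` appears to write the build argument (`${FFMPEG_VERSION}-static`), while `main` reads the version back from the installed binary with `ffmpeg -version | head -n 1 | awk '{ print $3 }'`. Below is a Python sketch of that pipeline. The banner string in the usage lines is a made-up example, not captured output, and the function names are hypothetical.

```python
def ffmpeg_version_from_banner(output: str) -> str:
    """Equivalent of `head -n 1 | awk '{ print $3 }'` on `ffmpeg -version` output."""
    lines = output.splitlines()
    if not lines:
        return ""
    fields = lines[0].split()  # like awk's default field splitting on runs of blanks
    return fields[2] if len(fields) >= 3 else ""  # awk prints "" for a missing $3


def third_party_versions_line(version: str) -> str:
    # The line appended to /app/common/third_party_versions.py
    return f"ffmpeg_version = '{version}'"


if __name__ == "__main__":
    banner = "ffmpeg version 4.3.1-static https://johnvansickle.com/ffmpeg/ Copyright\n..."
    print(third_party_versions_line(ffmpeg_version_from_banner(banner)))
    # ffmpeg_version = '4.3.1-static'
```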

@ -156,7 +101,7 @@ HEALTHCHECK --interval=1m --timeout=10s CMD /app/healthcheck.py http://127.0.0.1
# ENVS and ports
ENV PYTHONPATH "/app:${PYTHONPATH}"
EXPOSE 4848
EXPOSE 8080

# Volumes
VOLUME ["/config", "/downloads"]

Makefile

@ -8,17 +8,17 @@ all: clean build
dev:
$(python) tubesync/manage.py runserver
$(python) app/manage.py runserver

build:
mkdir -p tubesync/media
mkdir -p tubesync/static
$(python) tubesync/manage.py collectstatic --noinput
mkdir -p app/media
mkdir -p app/static
$(python) app/manage.py collectstatic --noinput

clean:
rm -rf tubesync/static
rm -rf app/static

container: clean

@ -29,13 +29,5 @@ runcontainer:
$(docker) run --rm --name $(name) --env-file dev.env --log-opt max-size=50m -ti -p 4848:4848 $(image)

stopcontainer:
$(docker) stop $(name)

test: build
cd tubesync && $(python) manage.py test --verbosity=2 && cd ..

shell:
cd tubesync && $(python) manage.py shell
test:
$(python) app/manage.py test --verbosity=2

Pipfile

@ -4,10 +4,9 @@ url = "https://pypi.org/simple"
verify_ssl = true

[dev-packages]
autopep8 = "*"

[packages]
django = "~=3.2"
django = "*"
django-sass-processor = "*"
libsass = "*"
pillow = "*"

@ -15,11 +14,9 @@ whitenoise = "*"
gunicorn = "*"
django-compressor = "*"
httptools = "*"
youtube-dl = "*"
django-background-tasks = "*"
django-basicauth = "*"
psycopg2-binary = "*"
mysqlclient = "*"
yt-dlp = "*"
redis = "*"
hiredis = "*"
requests = {extras = ["socks"], version = "*"}
requests = "*"

[requires]
python_version = "3"
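`main` constrains Django with the compatible-release operator (`django = "~=3.2"`), whereas `v0.3` accepted any version (`"*"`). Under PEP 440, `~=3.2` means `>=3.2, ==3.*`. Below is a simplified Python check for plain numeric versions. It deliberately ignores pre-, post- and dev-releases, so real tooling would use a full PEP 440 parser instead. The function names are hypothetical.

```python
from typing import Tuple


def _release(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def _pad(release: Tuple[int, ...], width: int) -> Tuple[int, ...]:
    # PEP 440 treats 3.2 and 3.2.0 as equal, so pad with zeros before comparing.
    return release + (0,) * (width - len(release))


def is_compatible(spec: str, version: str) -> bool:
    """Return True if `version` satisfies `~=spec` (numeric releases only)."""
    spec_r, ver_r = _release(spec), _release(version)
    if len(spec_r) < 2:
        raise ValueError("~= requires at least two release segments")
    width = max(len(spec_r), len(ver_r))
    spec_p, ver_p = _pad(spec_r, width), _pad(ver_r, width)
    prefix = spec_r[:-1]  # ~=3.2 pins the leading "3"
    return ver_p >= spec_p and ver_p[: len(prefix)] == prefix
```

For example, `is_compatible("3.2", "3.2.18")` holds while `is_compatible("3.2", "4.0")` does not. The `==3.1.4` pinned in the lock file below would also fall outside `~=3.2`.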

@ -0,0 +1,247 @@
{
"_meta": {
"hash": {
"sha256": "a4bb556fc61ee4583f9588980450b071814298ee4d1a1023fad149c14d14aaba"
},
"pipfile-spec": 6,
"requires": {
"python_version": "3"
},
"sources": [
{
"name": "pypi",
"url": "https://pypi.org/simple",
"verify_ssl": true
}
]
},
"default": {
"asgiref": {
"hashes": [
"sha256:5ee950735509d04eb673bd7f7120f8fa1c9e2df495394992c73234d526907e17",
"sha256:7162a3cb30ab0609f1a4c95938fd73e8604f63bdba516a7f7d64b83ff09478f0"
],
"version": "==3.3.1"
},
"certifi": {
"hashes": [
"sha256:1a4995114262bffbc2413b159f2a1a480c969de6e6eb13ee966d470af86af59c",
"sha256:719a74fb9e33b9bd44cc7f3a8d94bc35e4049deebe19ba7d8e108280cfd59830"
],
"version": "==2020.12.5"
},
"chardet": {
"hashes": [
"sha256:0d6f53a15db4120f2b08c94f11e7d93d2c911ee118b6b30a04ec3ee8310179fa",
"sha256:f864054d66fd9118f2e67044ac8981a54775ec5b67aed0441892edb553d21da5"
],
"version": "==4.0.0"
},
"django": {
"hashes": [
"sha256:5c866205f15e7a7123f1eec6ab939d22d5bde1416635cab259684af66d8e48a2",
"sha256:edb10b5c45e7e9c0fb1dc00b76ec7449aca258a39ffd613dbd078c51d19c9f03"
],
"index": "pypi",
"version": "==3.1.4"
},
"django-appconf": {
"hashes": [
"sha256:1b1d0e1069c843ebe8ae5aa48ec52403b1440402b320c3e3a206a0907e97bb06",
"sha256:be58deb54a43d77d2e1621fe59f787681376d3cd0b8bd8e4758ef6c3a6453380"
],
"version": "==1.0.4"
},
"django-background-tasks": {
"hashes": [
"sha256:e1b19e8d495a276c9d64c5a1ff8b41132f75d2f58e45be71b78650dad59af9de"
],
"index": "pypi",
"version": "==1.2.5"
},
"django-compat": {
"hashes": [
"sha256:3ac9a3bedc56b9365d9eb241bc5157d0c193769bf995f9a78dc1bc24e7c2331b"
],
"version": "==1.0.15"
},
"django-compressor": {
"hashes": [
"sha256:57ac0a696d061e5fc6fbc55381d2050f353b973fb97eee5593f39247bc0f30af",
"sha256:d2ed1c6137ddaac5536233ec0a819e14009553fee0a869bea65d03e5285ba74f"
],
"index": "pypi",
"version": "==2.4"
},
"django-sass-processor": {
"hashes": [
"sha256:9b46a12ca8bdcb397d46fbcc49e6a926ff9f76a93c5efeb23b495419fd01fc7a"
],
"index": "pypi",
"version": "==0.8.2"
},
"gunicorn": {
"hashes": [
"sha256:1904bb2b8a43658807108d59c3f3d56c2b6121a701161de0ddf9ad140073c626",
"sha256:cd4a810dd51bf497552cf3f863b575dabd73d6ad6a91075b65936b151cbf4f9c"
],
"index": "pypi",
"version": "==20.0.4"
},
"httptools": {
"hashes": [
"sha256:0a4b1b2012b28e68306575ad14ad5e9120b34fccd02a81eb08838d7e3bbb48be",
"sha256:3592e854424ec94bd17dc3e0c96a64e459ec4147e6d53c0a42d0ebcef9cb9c5d",
"sha256:41b573cf33f64a8f8f3400d0a7faf48e1888582b6f6e02b82b9bd4f0bf7497ce",
"sha256:56b6393c6ac7abe632f2294da53f30d279130a92e8ae39d8d14ee2e1b05ad1f2",
"sha256:86c6acd66765a934e8730bf0e9dfaac6fdcf2a4334212bd4a0a1c78f16475ca6",
"sha256:96da81e1992be8ac2fd5597bf0283d832287e20cb3cfde8996d2b00356d4e17f",
"sha256:96eb359252aeed57ea5c7b3d79839aaa0382c9d3149f7d24dd7172b1bcecb009",
"sha256:a2719e1d7a84bb131c4f1e0cb79705034b48de6ae486eb5297a139d6a3296dce",
"sha256:ac0aa11e99454b6a66989aa2d44bca41d4e0f968e395a0a8f164b401fefe359a",
"sha256:bc3114b9edbca5a1eb7ae7db698c669eb53eb8afbbebdde116c174925260849c",
"sha256:fa3cd71e31436911a44620473e873a256851e1f53dee56669dae403ba41756a4",
"sha256:fea04e126014169384dee76a153d4573d90d0cbd1d12185da089f73c78390437"
],
"index": "pypi",
"version": "==0.1.1"
},
"idna": {
"hashes": [
"sha256:b307872f855b18632ce0c21c5e45be78c0ea7ae4c15c828c20788b26921eb3f6",
"sha256:b97d804b1e9b523befed77c48dacec60e6dcb0b5391d57af6a65a312a90648c0"
],
"version": "==2.10"
},
"libsass": {
"hashes": [
"sha256:1521d2a8d4b397c6ec90640a1f6b5529077035efc48ef1c2e53095544e713d1b",
"sha256:1b2d415bbf6fa7da33ef46e549db1418498267b459978eff8357e5e823962d35",
"sha256:25ebc2085f5eee574761ccc8d9cd29a9b436fc970546d5ef08c6fa41eb57dff1",
"sha256:2ae806427b28bc1bb7cb0258666d854fcf92ba52a04656b0b17ba5e190fb48a9",
"sha256:4a246e4b88fd279abef8b669206228c92534d96ddcd0770d7012088c408dff23",
"sha256:553e5096414a8d4fb48d0a48f5a038d3411abe254d79deac5e008516c019e63a",
"sha256:697f0f9fa8a1367ca9ec6869437cb235b1c537fc8519983d1d890178614a8903",
"sha256:a8fd4af9f853e8bf42b1425c5e48dd90b504fa2e70d7dac5ac80b8c0a5a5fe85",
"sha256:c9411fec76f480ffbacc97d8188322e02a5abca6fc78e70b86a2a2b421eae8a2",
"sha256:daa98a51086d92aa7e9c8871cf1a8258124b90e2abf4697852a3dca619838618",
"sha256:e0e60836eccbf2d9e24ec978a805cd6642fa92515fbd95e3493fee276af76f8a",
"sha256:e64ae2587f1a683e831409aad03ba547c245ef997e1329fffadf7a866d2510b8",
"sha256:f6852828e9e104d2ce0358b73c550d26dd86cc3a69439438c3b618811b9584f5"
],
"index": "pypi",
"version": "==0.20.1"
},
"pillow": {
"hashes": [
"sha256:006de60d7580d81f4a1a7e9f0173dc90a932e3905cc4d47ea909bc946302311a",
"sha256:0a2e8d03787ec7ad71dc18aec9367c946ef8ef50e1e78c71f743bc3a770f9fae",
"sha256:0eeeae397e5a79dc088d8297a4c2c6f901f8fb30db47795113a4a605d0f1e5ce",
"sha256:11c5c6e9b02c9dac08af04f093eb5a2f84857df70a7d4a6a6ad461aca803fb9e",
"sha256:2fb113757a369a6cdb189f8df3226e995acfed0a8919a72416626af1a0a71140",
"sha256:4b0ef2470c4979e345e4e0cc1bbac65fda11d0d7b789dbac035e4c6ce3f98adb",
"sha256:59e903ca800c8cfd1ebe482349ec7c35687b95e98cefae213e271c8c7fffa021",
"sha256:5abd653a23c35d980b332bc0431d39663b1709d64142e3652890df4c9b6970f6",
"sha256:5f9403af9c790cc18411ea398a6950ee2def2a830ad0cfe6dc9122e6d528b302",
"sha256:6b4a8fd632b4ebee28282a9fef4c341835a1aa8671e2770b6f89adc8e8c2703c",
"sha256:6c1aca8231625115104a06e4389fcd9ec88f0c9befbabd80dc206c35561be271",
"sha256:795e91a60f291e75de2e20e6bdd67770f793c8605b553cb6e4387ce0cb302e09",
"sha256:7ba0ba61252ab23052e642abdb17fd08fdcfdbbf3b74c969a30c58ac1ade7cd3",
"sha256:7c9401e68730d6c4245b8e361d3d13e1035cbc94db86b49dc7da8bec235d0015",
"sha256:81f812d8f5e8a09b246515fac141e9d10113229bc33ea073fec11403b016bcf3",
"sha256:895d54c0ddc78a478c80f9c438579ac15f3e27bf442c2a9aa74d41d0e4d12544",
"sha256:8de332053707c80963b589b22f8e0229f1be1f3ca862a932c1bcd48dafb18dd8",
"sha256:92c882b70a40c79de9f5294dc99390671e07fc0b0113d472cbea3fde15db1792",
"sha256:95edb1ed513e68bddc2aee3de66ceaf743590bf16c023fb9977adc4be15bd3f0",
"sha256:b63d4ff734263ae4ce6593798bcfee6dbfb00523c82753a3a03cbc05555a9cc3",
"sha256:bd7bf289e05470b1bc74889d1466d9ad4a56d201f24397557b6f65c24a6844b8",
"sha256:cc3ea6b23954da84dbee8025c616040d9aa5eaf34ea6895a0a762ee9d3e12e11",
"sha256:cc9ec588c6ef3a1325fa032ec14d97b7309db493782ea8c304666fb10c3bd9a7",
"sha256:d3d07c86d4efa1facdf32aa878bd508c0dc4f87c48125cc16b937baa4e5b5e11",
"sha256:d8a96747df78cda35980905bf26e72960cba6d355ace4780d4bdde3b217cdf1e",
"sha256:e38d58d9138ef972fceb7aeec4be02e3f01d383723965bfcef14d174c8ccd039",
"sha256:eb472586374dc66b31e36e14720747595c2b265ae962987261f044e5cce644b5",
"sha256:fbd922f702582cb0d71ef94442bfca57624352622d75e3be7a1e7e9360b07e72"
],
"index": "pypi",
"version": "==8.0.1"
},
"pytz": {
"hashes": [
"sha256:3e6b7dd2d1e0a59084bcee14a17af60c5c562cdc16d828e8eba2e683d3a7e268",
"sha256:5c55e189b682d420be27c6995ba6edce0c0a77dd67bfbe2ae6607134d5851ffd"
],
"version": "==2020.4"
},
"rcssmin": {
"hashes": [
"sha256:ca87b695d3d7864157773a61263e5abb96006e9ff0e021eff90cbe0e1ba18270"
],
"version": "==1.0.6"
},
"requests": {
"hashes": [
"sha256:27973dd4a904a4f13b263a19c866c13b92a39ed1c964655f025f3f8d3d75b804",
"sha256:c210084e36a42ae6b9219e00e48287def368a26d03a048ddad7bfee44f75871e"
],
"index": "pypi",
"version": "==2.25.1"
},
"rjsmin": {
"hashes": [
"sha256:0ab825839125eaca57cc59581d72e596e58a7a56fbc0839996b7528f0343a0a8",
"sha256:211c2fe8298951663bbc02acdffbf714f6793df54bfc50e1c6c9e71b3f2559a3",
"sha256:466fe70cc5647c7c51b3260c7e2e323a98b2b173564247f9c89e977720a0645f",
"sha256:585e75a84d9199b68056fd4a083d9a61e2a92dfd10ff6d4ce5bdb04bc3bdbfaf",
"sha256:6044ca86e917cd5bb2f95e6679a4192cef812122f28ee08c677513de019629b3",
"sha256:714329db774a90947e0e2086cdddb80d5e8c4ac1c70c9f92436378dedb8ae345",
"sha256:799890bd07a048892d8d3deb9042dbc20b7f5d0eb7da91e9483c561033b23ce2",
"sha256:975b69754d6a76be47c0bead12367a1ca9220d08e5393f80bab0230d4625d1f4",
"sha256:b15dc75c71f65d9493a8c7fa233fdcec823e3f1b88ad84a843ffef49b338ac32",
"sha256:dd0f4819df4243ffe4c964995794c79ca43943b5b756de84be92b445a652fb86",
"sha256:e3908b21ebb584ce74a6ac233bdb5f29485752c9d3be5e50c5484ed74169232c",
"sha256:e487a7783ac4339e79ec610b98228eb9ac72178973e3dee16eba0e3feef25924",
"sha256:ecd29f1b3e66a4c0753105baec262b331bcbceefc22fbe6f7e8bcd2067bcb4d7"
],
"version": "==1.1.0"
},
"six": {
"hashes": [
"sha256:30639c035cdb23534cd4aa2dd52c3bf48f06e5f4a941509c8bafd8ce11080259",
"sha256:8b74bedcbbbaca38ff6d7491d76f2b06b3592611af620f8426e82dddb04a5ced"
],
"version": "==1.15.0"
},
"sqlparse": {
"hashes": [
"sha256:017cde379adbd6a1f15a61873f43e8274179378e95ef3fede90b5aa64d304ed0",
"sha256:0f91fd2e829c44362cbcfab3e9ae12e22badaa8a29ad5ff599f9ec109f0454e8"
],
"version": "==0.4.1"
},
"urllib3": {
"hashes": [
"sha256:19188f96923873c92ccb987120ec4acaa12f0461fa9ce5d3d0772bc965a39e08",
"sha256:d8ff90d979214d7b4f8ce956e80f4028fc6860e4431f731ea4a8c08f23f99473"
],
"version": "==1.26.2"
},
"whitenoise": {
"hashes": [
"sha256:05ce0be39ad85740a78750c86a93485c40f08ad8c62a6006de0233765996e5c7",
"sha256:05d00198c777028d72d8b0bbd234db605ef6d60e9410125124002518a48e515d"
],
"index": "pypi",
"version": "==5.2.0"
},
"youtube-dl": {
"hashes": [
"sha256:65968065e66966955dc79fad9251565fcc982566118756da624bd21467f3a04c",
"sha256:eaa859f15b6897bec21474b7787dc958118c8088e1f24d4ef1d58eab13188958"
],
"index": "pypi",
"version": "==2020.12.14"
}
},
"develop": {}
}

README.md

@ -1,6 +1,6 @@
# TubeSync

**This is a preview release of TubeSync, it may contain bugs but should be usable**
**This is a preview release of TubeSync, it may contain the bugs but should be usable**

TubeSync is a PVR (personal video recorder) for YouTube. Or, like Sonarr but for
YouTube (with a built-in download client). It is designed to synchronize channels and

@ -9,43 +9,36 @@ downloaded.
If you want to watch YouTube videos in particular quality or settings from your local
media server, then TubeSync is for you. Internally, TubeSync is a web interface wrapper
on `yt-dlp` and `ffmpeg` with a task scheduler.
on `youtube-dl` and `ffmpeg` with a task scheduler.

There are several other web interfaces to YouTube and `yt-dlp` all with varying
features and implementations. TubeSync's largest difference is full PVR experience of
There are several other web interfaces to YouTube and `youtube-dl` all with varying
features and implemenations. TubeSync's largest difference is full PVR experience of
updating media servers and better selection of media formats. Additionally, to be as
hands-free as possible, TubeSync has gradual retrying of failures with back-off timers
so media which fails to download will be retried for an extended period making it,
hopefully, quite reliable.
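The README does not state the schedule behind its "gradual retrying of failures with back-off timers". The snippet below is a generic illustration only: exponential back-off with a ceiling, written in Python. The base delay, factor, cap and the `retry` helper are all hypothetical and are not TubeSync's actual settings.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(count: int, base: float = 60.0, factor: float = 2.0,
                   cap: float = 3600.0) -> List[float]:
    """Delays in seconds before each retry: base, base*factor, ... capped at `cap`."""
    return [min(cap, base * factor ** n) for n in range(count)]


def retry(task: Callable[[], T], attempts: int = 5,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `task` up to `attempts` times, sleeping with back-off between failures."""
    for delay in backoff_delays(attempts - 1):
        try:
            return task()
        except Exception:
            sleep(delay)  # wait longer after each consecutive failure
    return task()  # last attempt: any exception propagates to the caller
```

Passing `sleep` in as a parameter lets the helper be exercised in tests without any real waiting.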

|

||||

|

||||

|

||||

# Latest container image

|

||||

|

||||

```yaml

|

||||

ghcr.io/meeb/tubesync:latest

|

||||

```

|

||||

|

||||

# Screenshots

|

||||

|

||||

### Dashboard

|

||||

|

||||

|

||||

|

||||

|

||||

### Sources overview

|

||||

|

||||

|

||||

|

||||

|

||||

### Source details

|

||||

|

||||

|

||||

|

||||

|

||||

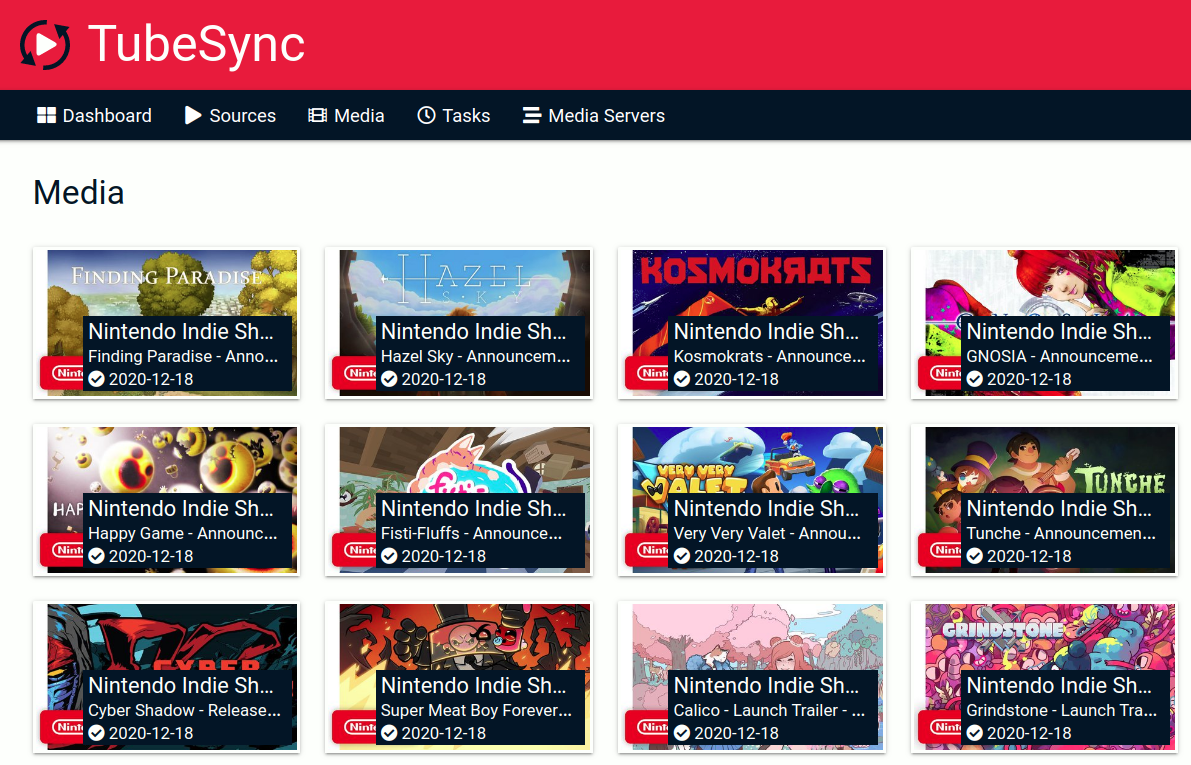

### Media overview

|

||||

|

||||

|

||||

|

||||

|

||||

### Media details

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

# Requirements

|

||||

|

|

@ -69,12 +62,11 @@ currently just Plex, to complete the PVR experience.

|

|||

# Installation

|

||||

|

||||

TubeSync is designed to be run in a container, such as via Docker or Podman. It also

|

||||

works in a Docker Compose stack. `amd64` (most desktop PCs and servers) and `arm64`

(modern ARM computers, such as the Raspberry Pi 3 or later) are supported.
|

||||

works in a Docker Compose stack. Only `amd64` is initially supported.

|

||||

|

||||

Example (with Docker on *nix):

|

||||

|

||||

First find the user ID and group ID you want to run TubeSync as, if you're not

|

||||

First find your the user ID and group ID you want to run TubeSync as, if you're not

|

||||

sure what this is it's probably your current user ID and group ID:

|

||||

|

||||

```bash


@@ -99,8 +91,8 @@ $ mkdir /some/directory/tubesync-downloads
Finally, download and run the container:

```bash
# Pull image
$ docker pull ghcr.io/meeb/tubesync:latest
# Pull a versioned image
$ docker pull ghcr.io/meeb/tubesync:v0.3
# Start the container using your user ID and group ID
$ docker run \
  -d \

@@ -111,21 +103,19 @@ $ docker run \
  -v /some/directory/tubesync-config:/config \
  -v /some/directory/tubesync-downloads:/downloads \
  -p 4848:4848 \
  ghcr.io/meeb/tubesync:latest
  ghcr.io/meeb/tubesync:v0.3
```

Once running, open `http://localhost:4848` in your browser and you should see the
TubeSync dashboard. If you do, you can proceed to adding some sources (YouTube channels
and playlists). If not, check `docker logs tubesync` to see what errors might be
occurring, typical ones are file permission issues.
occuring, typical ones are file permission issues.

Alternatively, for Docker Compose, you can use something like:

```yml
version: '3.7'
services:
```yaml
  tubesync:
    image: ghcr.io/meeb/tubesync:latest
    image: ghcr.io/meeb/tubesync:v0.3
    container_name: tubesync
    restart: unless-stopped
    ports:

@@ -134,52 +124,17 @@ services:
      - /some/directory/tubesync-config:/config
      - /some/directory/tubesync-downloads:/downloads
    environment:
      - TZ=Europe/London
      - TZ=$TIMEZONE
      - PUID=1000
      - PGID=1000
```

## Optional authentication

Available in `v1.0` (or `:latest`) and later. If you want to enable a basic username and
password to be required to access the TubeSync dashboard you can set them with the
following environment variables:

```bash
HTTP_USER
HTTP_PASS
```

For example, in the `docker run ...` line add in:

```bash
...
  -e HTTP_USER=some-username \
  -e HTTP_PASS=some-secure-password \
...
```

Or in your Docker Compose file you would add in:

```yaml
...
    environment:
      - HTTP_USER=some-username
      - HTTP_PASS=some-secure-password
...
```

When BOTH `HTTP_USER` and `HTTP_PASS` are set then basic HTTP authentication will be
enabled.
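
The rule above can be expressed as a small shell check, for example to confirm what a given environment will do before the container is started. This is a minimal sketch: `http_auth_status` is a hypothetical helper, not part of TubeSync, and treating an empty value as "not set" is an assumption rather than confirmed TubeSync behaviour.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the README rule: basic HTTP authentication is
# only enabled when BOTH HTTP_USER and HTTP_PASS are set. Treating an empty
# value as "not set" is an assumption, not confirmed TubeSync behaviour.
http_auth_status() {
  if [ -n "${HTTP_USER:-}" ] && [ -n "${HTTP_PASS:-}" ]; then
    echo "enabled"
  else
    echo "disabled"
  fi
}

http_auth_status
```

With both variables set and the container running, a request such as `curl -u some-username:some-secure-password http://localhost:4848/` should then be needed to load the dashboard.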

# Updating

To update, you can just pull a new version of the container image as they are released.

```bash
$ docker pull ghcr.io/meeb/tubesync:v[number]
$ docker pull pull ghcr.io/meeb/tubesync:v[number]
```

Back-end updates such as database migrations should be automatic.
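
Because the tag in `v[number]` is easy to mistype, a tiny wrapper can build the image reference and reject anything that does not look like a release tag. This is a hypothetical convenience function, not part of TubeSync; the accepted tag shape (`v` followed by dot-separated numbers, such as `v0.3` or `v1.0`) is an assumption based on the tags mentioned in this README.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the image reference for a release tag.
# Accepting only tags like v0.3 or v1.0 is an assumption based on this README.
tubesync_image() {
  local tag="${1:-}"
  if [[ ! "$tag" =~ ^v[0-9]+(\.[0-9]+)*$ ]]; then
    echo "unexpected tag: '${tag}'" >&2
    return 1
  fi
  printf 'ghcr.io/meeb/tubesync:%s\n' "$tag"
}
```

For example, `image="$(tubesync_image v1.0)" && docker pull "$image"`. Pulling a new image does not change a container that is already running; it has to be recreated from the new image, for example by re-running the `docker run` command above.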

@@ -231,27 +186,14 @@ $ docker logs --follow tubesync
```

# Advanced usage guides

Once you're happy using TubeSync there are some advanced usage guides for more complex
and less common features:

* [Import existing media into TubeSync](https://github.com/meeb/tubesync/blob/main/docs/import-existing-media.md)
* [Sync or create missing metadata files](https://github.com/meeb/tubesync/blob/main/docs/create-missing-metadata.md)
* [Reset tasks from the command line](https://github.com/meeb/tubesync/blob/main/docs/reset-tasks.md)
* [Using PostgreSQL, MySQL or MariaDB as database backends](https://github.com/meeb/tubesync/blob/main/docs/other-database-backends.md)
* [Using cookies](https://github.com/meeb/tubesync/blob/main/docs/using-cookies.md)
* [Reset metadata](https://github.com/meeb/tubesync/blob/main/docs/reset-metadata.md)

# Warnings

### 1. Index frequency

It's a good idea to add sources with as long of an index frequency as possible. This is
the duration between indexes of the source. An index is when TubeSync checks to see
It's a good idea to add sources with as low an index frequency as possible. This is the
duration between indexes of the source. An index is when TubeSync checks to see
what videos are available on a channel or playlist to find new media. Try and keep this as
long as possible, up to 24 hours.
long as possible, 24 hours if possible.

### 2. Indexing massive channels

@@ -261,14 +203,6 @@ every hour" or similar short interval it's entirely possible your TubeSync insta
spend its entire time just indexing the massive channel over and over again without
downloading any media. Check your tasks for the status of your TubeSync install.

If you add a significant amount of "work" due to adding many large channels you may
need to increase the number of background workers by setting the `TUBESYNC_WORKERS`
environment variable. Try around 4 at most, although the absolute maximum allowed is 8.
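
To keep a chosen value inside the range described above, a wrapper script could normalise it before passing it to the container. This is a sketch under stated assumptions: the default of 2 and the maximum of 8 come from this README, while clamping out-of-range or invalid values (rather than rejecting them) and the `effective_workers` name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: normalise a requested TUBESYNC_WORKERS value.
# Default (2) and maximum (8) are from the README; clamping is an assumption.
effective_workers() {
  local requested="${1:-}"
  # Empty or non-numeric input falls back to the documented default of 2
  if [[ ! "$requested" =~ ^[0-9]+$ ]]; then
    echo 2
    return 0
  fi
  # Force base 10 so values such as "08" are not parsed as octal
  requested=$((10#$requested))
  if (( requested < 1 )); then
    echo 1
  elif (( requested > 8 )); then
    echo 8
  else
    echo "$requested"
  fi
}
```

Used as, for example, `-e TUBESYNC_WORKERS="$(effective_workers 4)"` in the `docker run` line.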

**Be nice.** It's entirely possible your IP address could get throttled by the
source if you try and crawl extremely large amounts very quickly. **Try and be polite
with the smallest amount of indexing and concurrent downloads possible for your needs.**

# FAQ

@@ -282,8 +216,8 @@ automatically.

### Does TubeSync support any other video platforms?

At the moment, no. This is a pre-release. The library TubeSync uses that does most
of the downloading work, `yt-dlp`, supports many hundreds of video sources so it's
At the moment, no. This is a first release. The library TubeSync uses that does most
of the downloading work, `youtube-dl`, supports many hundreds of video sources so it's
likely more will be added to TubeSync if there is demand for it.

### Is there a progress bar?

@@ -295,27 +229,27 @@ your install is doing check the container logs.

### Are there alerts when a download is complete?

No, this feature is best served by existing services such as the excellent
[Tautulli](https://tautulli.com/) which can monitor your Plex server and send alerts
No, this feature is best served by existing services such as the execelent
[tautulli](https://tautulli.com/) which can monitor your Plex server and send alerts
that way.

### There are errors in my "tasks" tab!
### There's errors in my "tasks" tab!

You only really need to worry about these if there is a permanent failure. Some errors
are temporary and will be retried for you automatically, such as a download got
interrupted and will be tried again later. Sources with permanent errors (such as no
are temproary and will be retried for you automatically, such as a download got
interrupted and will be tried again later. Sources with permanet errors (such as no
media available because you got a channel name wrong) will be shown as errors on the
"sources" tab.

### What is TubeSync written in?

Python3 using Django, embedding yt-dlp. It's pretty much glue between other much
Python3 using Django, embedding youtube-dl. It's pretty much glue between other much
larger libraries.

Notable libraries and software used:

* [Django](https://www.djangoproject.com/)
* [yt-dlp](https://github.com/yt-dlp/yt-dlp)
* [youtube-dl](https://yt-dl.org/)
* [ffmpeg](https://ffmpeg.org/)
* [Django Background Tasks](https://github.com/arteria/django-background-tasks/)
* [django-sass](https://github.com/coderedcorp/django-sass/)

@@ -325,7 +259,7 @@ See the [Pipefile](https://github.com/meeb/tubesync/blob/main/Pipfile) for a ful

### Can I get access to the full Django admin?

Yes, although pretty much all operations are available through the front-end interface
Yes, although pretty much all operations are available through the front end interface
and you can probably break things by playing in the admin. If you still want to access
it you can run:

@@ -338,9 +272,7 @@ can log in at http://localhost:4848/admin

### Are there user accounts or multi-user support?

There is support for basic HTTP authentication by setting the `HTTP_USER` and
`HTTP_PASS` environment variables. There is no support for multi-user or user
management.
No not at the moment. This could be added later if there is demand for it.

### Does TubeSync support HTTPS?

@@ -351,37 +283,27 @@ etc.). Configuration of this is beyond the scope of this README.

Just `amd64` for the moment. Others may be made available if there is demand.

### The pipenv install fails with "Locking failed"!

Make sure that you have `mysql_config` or `mariadb_config` available, as required by the Python module `mysqlclient`. On Debian-based systems this is usually found in the package `libmysqlclient-dev`.

# Advanced configuration

There are a number of other environment variables you can set. These are, mostly,
**NOT** required to be set in the default container installation, they are really only
**NOT** required to be set in the default container installation, they are mostly
useful if you are manually installing TubeSync in some other environment. These are:

| Name | What | Example |
| --------------------------- | ------------------------------------------------------------ | ------------------------------------ |
| DJANGO_SECRET_KEY | Django's SECRET_KEY | YJySXnQLB7UVZw2dXKDWxI5lEZaImK6l |
| DJANGO_URL_PREFIX | Run TubeSync in a sub-URL on the web server | /somepath/ |
| TUBESYNC_DEBUG | Enable debugging | True |
| TUBESYNC_WORKERS | Number of background workers, default is 2, max allowed is 8 | 2 |
| TUBESYNC_HOSTS | Django's ALLOWED_HOSTS, defaults to `*` | tubesync.example.com,otherhost.com |
| TUBESYNC_RESET_DOWNLOAD_DIR | Toggle resetting `/downloads` permissions, defaults to True | True |
| GUNICORN_WORKERS | Number of gunicorn workers to spawn | 3 |
| LISTEN_HOST | IP address for gunicorn to listen on | 127.0.0.1 |
| LISTEN_PORT | Port number for gunicorn to listen on | 8080 |
| HTTP_USER | Sets the username for HTTP basic authentication | some-username |
| HTTP_PASS | Sets the password for HTTP basic authentication | some-secure-password |
| DATABASE_CONNECTION | Optional external database connection details | mysql://user:pass@host:port/database |
| Name | What | Example |
| ----------------- | ------------------------------------- | ---------------------------------- |
| DJANGO_SECRET_KEY | Django secret key | YJySXnQLB7UVZw2dXKDWxI5lEZaImK6l |
| TUBESYNC_DEBUG | Enable debugging | True |
| TUBESYNC_HOSTS | Django's ALLOWED_HOSTS | tubesync.example.com,otherhost.com |
| GUNICORN_WORKERS | Number of gunicorn workers to spawn | 3 |
| LISTEN_HOST | IP address for gunicorn to listen on | 127.0.0.1 |
| LISTEN_PORT | Port number for gunicorn to listen on | 8080 |
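
`DJANGO_SECRET_KEY` should be a long, random and private value; the example in the table is a 32-character alphanumeric string. A minimal way to generate something of the same shape from `/dev/urandom` is sketched below. The function name and the default length of 32 are illustrative choices, not TubeSync requirements.

```bash
#!/usr/bin/env bash
# Sketch: generate a random alphanumeric string suitable for DJANGO_SECRET_KEY.
# The 32-character default matches the table's example; it is not a requirement.
gen_secret_key() {
  local len="${1:-32}" key=""
  # Keep pulling random bytes until enough alphanumerics have been collected
  while [ "${#key}" -lt "$len" ]; do
    key+="$(head -c 512 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')"
  done
  printf '%s\n' "${key:0:len}"
}
```

Generate the key once and keep it (for example in your Compose file or an env file) rather than on every start, since changing Django's `SECRET_KEY` invalidates existing sessions.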

# Manual, non-containerised, installation

As a relatively normal Django app you can run TubeSync without the container. Beyond
following this rough guide, you are on your own and should be knowledgeable about
the following rough guide you are on your own and should be knowledgeable about
installing and running WSGI-based Python web applications before attempting this.

1. Clone or download this repo

@@ -392,7 +314,7 @@ installing and running WSGI-based Python web applications before attempting this
   `tubesync/tubesync/local_settings.py` and edit it as appropriate
5. Run migrations with `./manage.py migrate`
6. Collect static files with `./manage.py collectstatic`
6. Set up your preferred WSGI server, such as `gunicorn` pointing it to the application
6. Set up your preferred WSGI server, such as `gunicorn` poiting it to the application
   in `tubesync/tubesync/wsgi.py`
7. Set up your proxy server such as `nginx` and forward it to the WSGI server
8. Check the web interface is working

@@ -404,7 +326,7 @@ installing and running WSGI-based Python web applications before attempting this

# Tests

There is a moderately comprehensive test suite focusing on the custom media format
There is a moderately comprehensive test suite focussing on the custom media format
matching logic and that the front-end interface works. You can run it via Django:

```bash

@@ -0,0 +1,27 @@
#!/usr/bin/with-contenv bash

# Change runtime user UID and GID
PUID=${PUID:-911}
PGID=${PGID:-911}
groupmod -o -g "$PGID" app
usermod -o -u "$PUID" app

# Reset permissions
chown -R app:app /run/app && \
chmod -R 0700 /run/app && \
chown -R app:app /config && \
chmod -R 0755 /config && \
chown -R app:app /downloads && \
chmod -R 0755 /downloads && \
chown -R root:app /app && \
chmod -R 0750 /app && \
chown -R app:app /app/common/static && \
chmod -R 0750 /app/common/static && \
chown -R app:app /app/static && \
chmod -R 0750 /app/static && \
find /app -type f -exec chmod 640 {} \; && \
chmod +x /app/healthcheck.py

# Run migrations
exec s6-setuidgid app \
  /usr/bin/python3 /app/manage.py migrate

@@ -79,11 +79,6 @@ http {
proxy_connect_timeout 10;
}

# File download and streaming
location /media-data/ {
internal;
alias /downloads/;
}
}

}

@@ -1,46 +0,0 @@
bind 127.0.0.1
protected-mode yes
port 6379
tcp-backlog 511
timeout 0
tcp-keepalive 300
daemonize no
supervised no
loglevel notice
logfile ""
databases 1
always-show-logo no
save ""
dir /var/lib/redis
maxmemory 64mb
maxmemory-policy noeviction
lazyfree-lazy-eviction no
lazyfree-lazy-expire no
lazyfree-lazy-server-del no
replica-lazy-flush no
lazyfree-lazy-user-del no
oom-score-adj no
oom-score-adj-values 0 200 800
appendonly no
appendfsync no
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
stream-node-max-bytes 4096
stream-node-max-entries 100
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit replica 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
dynamic-hz yes
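
The Redis configuration above (which the redis run script further down loads from `/etc/redis/redis.conf`) disables persistence (`save ""`, `appendonly no`) and caps memory with `maxmemory 64mb` and `maxmemory-policy noeviction`. When checking settings like these in a config file, a small lookup helper can be handy. `conf_get` is a hypothetical sketch: it prints the rest of the first non-comment line whose first word matches the directive, which suits simple one-line directives like these but is not a full Redis config parser.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of the first matching directive in a
# redis.conf-style file. Comment and blank lines are skipped. This is a simple
# line-based lookup, not a full Redis configuration parser.
conf_get() {
  local file="$1" key="$2"
  awk -v k="$key" '
    /^[ \t]*#/ || NF == 0 { next }
    $1 == k {
      sub(/^[ \t]*[^ \t]+[ \t]+/, "")
      print
      exit
    }
  ' "$file"
}
```

For example, `conf_get /etc/redis/redis.conf maxmemory` inside the container.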

@@ -1 +0,0 @@
gunicorn

@@ -1,25 +0,0 @@
#!/usr/bin/with-contenv bash

UMASK_SET=${UMASK_SET:-022}
umask "$UMASK_SET"

cd /app || exit

PIDFILE=/run/app/celery-beat.pid
SCHEDULE=/tmp/tubesync-celerybeat-schedule

if [ -f "${PIDFILE}" ]
then
  PID=$(cat $PIDFILE)
  echo "Unexpected PID file exists at ${PIDFILE} with PID: ${PID}"
  if kill -0 $PID
  then
    echo "Killing old gunicorn process with PID: ${PID}"
    kill -9 $PID
  fi
  echo "Removing stale PID file: ${PIDFILE}"
  rm ${PIDFILE}
fi

#exec s6-setuidgid app \
# /usr/local/bin/celery --workdir /app -A tubesync beat --pidfile ${PIDFILE} -s ${SCHEDULE}

@@ -1 +0,0 @@
longrun

@@ -1 +0,0 @@
gunicorn

@@ -1,24 +0,0 @@
#!/usr/bin/with-contenv bash

UMASK_SET=${UMASK_SET:-022}
umask "$UMASK_SET"

cd /app || exit

PIDFILE=/run/app/celery-worker.pid

if [ -f "${PIDFILE}" ]
then
  PID=$(cat $PIDFILE)
  echo "Unexpected PID file exists at ${PIDFILE} with PID: ${PID}"
  if kill -0 $PID
  then
    echo "Killing old gunicorn process with PID: ${PID}"
    kill -9 $PID
  fi
  echo "Removing stale PID file: ${PIDFILE}"
  rm ${PIDFILE}
fi

#exec s6-setuidgid app \
# /usr/local/bin/celery --workdir /app -A tubesync worker --pidfile ${PIDFILE} -l INFO

@@ -1 +0,0 @@
longrun

@@ -1 +0,0 @@
tubesync-init

@@ -1,24 +0,0 @@
#!/command/with-contenv bash

UMASK_SET=${UMASK_SET:-022}
umask "$UMASK_SET"

cd /app || exit

PIDFILE=/run/app/gunicorn.pid

if [ -f "${PIDFILE}" ]
then
  PID=$(cat $PIDFILE)
  echo "Unexpected PID file exists at ${PIDFILE} with PID: ${PID}"
  if kill -0 $PID
  then
    echo "Killing old gunicorn process with PID: ${PID}"
    kill -9 $PID
  fi
  echo "Removing stale PID file: ${PIDFILE}"
  rm ${PIDFILE}
fi

exec s6-setuidgid app \
  /usr/local/bin/gunicorn -c /app/tubesync/gunicorn.py --capture-output tubesync.wsgi:application
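
The gunicorn and celery run scripts above each repeat the same stale-PID-file block. As a sketch of how it could be factored into one function (the name `clear_stale_pidfile` is hypothetical), the version below keeps the same messages and `kill -9` behaviour but additionally refuses to signal anything that is not a plain PID greater than 1, since `kill -0 0` would otherwise target the whole process group.

```bash
#!/usr/bin/env bash
# Sketch: the stale PID file handling from the run scripts, as one function.
# The PID validation is an addition; the original scripts use $PID unchecked.
clear_stale_pidfile() {
  local pidfile="$1" name="${2:-unknown}" pid
  # Nothing to do when no PID file was left behind
  [ -f "$pidfile" ] || return 0
  pid="$(cat "$pidfile")"
  echo "Unexpected PID file exists at ${pidfile} with PID: ${pid}"
  # Only signal values that look like a real PID (not empty, 0 or 1)
  if [[ "$pid" =~ ^[1-9][0-9]*$ ]] && [ "$pid" -gt 1 ] && kill -0 "$pid" 2>/dev/null; then
    echo "Killing old ${name} process with PID: ${pid}"
    kill -9 "$pid"
  fi
  echo "Removing stale PID file: ${pidfile}"
  rm -f "$pidfile"
}
```

A run script would call, for example, `clear_stale_pidfile /run/app/gunicorn.pid gunicorn` before its `exec s6-setuidgid app` line. Passing a name also fixes the celery scripts' log message, which currently says "gunicorn" in all three.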

@@ -1 +0,0 @@
longrun

@@ -1 +0,0 @@
gunicorn

@@ -1,5 +0,0 @@
#!/command/with-contenv bash

cd /

/usr/sbin/nginx

@@ -1 +0,0 @@
longrun

@@ -1,4 +0,0 @@
#!/command/with-contenv bash

exec s6-setuidgid redis \
  /usr/bin/redis-server /etc/redis/redis.conf

@@ -1 +0,0 @@
longrun

@@ -1,34 +0,0 @@
#!/command/with-contenv bash

# Change runtime user UID and GID
PUID="${PUID:-911}"
PGID="${PGID:-911}"
groupmod -o -g "$PGID" app
usermod -o -u "$PUID" app

# Reset permissions
chown -R app:app /run/app
chmod -R 0700 /run/app
chown -R app:app /config
chmod -R 0755 /config
chown -R root:app /app
chmod -R 0750 /app
chown -R app:app /app/common/static
chmod -R 0750 /app/common/static
chown -R app:app /app/static
chmod -R 0750 /app/static
find /app -type f ! -iname healthcheck.py -exec chmod 640 {} \;
chmod 0755 /app/healthcheck.py

# Optionally reset the download dir permissions
TUBESYNC_RESET_DOWNLOAD_DIR="${TUBESYNC_RESET_DOWNLOAD_DIR:-True}"
if [ "$TUBESYNC_RESET_DOWNLOAD_DIR" == "True" ]
then
  echo "TUBESYNC_RESET_DOWNLOAD_DIR=True, Resetting /downloads directory permissions"
  chown -R app:app /downloads
  chmod -R 0755 /downloads
fi

# Run migrations
exec s6-setuidgid app \
  /usr/bin/python3 /app/manage.py migrate
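
The `/downloads` reset in the init script above is controlled by a plain string comparison: only the exact value `True` (or leaving the variable unset or empty, which falls back to `True`) triggers the `chown`/`chmod`. The sketch below isolates that check to show which values disable the reset; `should_reset_downloads` is a hypothetical name and the function only reproduces the script's `==` test.

```bash
#!/usr/bin/env bash
# Sketch: the same test the init script applies to TUBESYNC_RESET_DOWNLOAD_DIR.
# Unset or empty falls back to "True"; otherwise only the exact string "True"
# enables the reset, so "true", "1" or "yes" all leave /downloads untouched.
should_reset_downloads() {
  local value="${1:-True}"
  [ "$value" == "True" ]
}

for v in True "" true 1 False; do
  if should_reset_downloads "$v"; then
    echo "'${v}' -> reset /downloads permissions"
  else
    echo "'${v}' -> leave /downloads as-is"
  fi
done
```

In practice, `-e TUBESYNC_RESET_DOWNLOAD_DIR=False` (or any value other than `True`) is therefore enough to skip the reset.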

@@ -1 +0,0 @@
oneshot

@@ -1,3 +0,0 @@
#!/command/execlineb -P

/etc/s6-overlay/s6-rc.d/tubesync-init/run

@@ -1 +0,0 @@
gunicorn

@@ -1 +0,0 @@
longrun

@@ -0,0 +1,9 @@
#!/usr/bin/with-contenv bash

UMASK_SET=${UMASK_SET:-022}
umask "$UMASK_SET"

cd /app || exit

exec s6-setuidgid app \
  /usr/local/bin/gunicorn -c /app/tubesync/gunicorn.py --capture-output tubesync.wsgi:application

@@ -0,0 +1,5 @@
#!/usr/bin/with-contenv bash

cd /

/usr/sbin/nginx

@@ -1,4 +1,4 @@
#!/command/with-contenv bash
#!/usr/bin/with-contenv bash

exec s6-setuidgid app \
  /usr/bin/python3 /app/manage.py process_tasks

@@ -1,37 +0,0 @@
# TubeSync

## Advanced usage guide - creating missing metadata

This is a new feature in v0.9 of TubeSync and later. It allows you to create or
re-create missing metadata in your TubeSync download directories for missing `nfo`
files and thumbnails.

If you add a source with "write NFO files" or "copy thumbnails" disabled, download
some media and then update the source to write NFO files or copy thumbnails, then
TubeSync will not automatically attempt to retroactively copy or create your missing
metadata files. You can use a special one-off command to manually write missing
metadata files to the correct locations.

## Requirements

You have added a source without metadata writing enabled, downloaded some media, then
updated the source to enable metadata writing.

## Steps

### 1. Run the batch metadata sync command

Execute the following Django command:

`./manage.py sync-missing-metadata`

When deploying TubeSync inside a container, you can execute this with:

`docker exec -ti tubesync python3 /app/manage.py sync-missing-metadata`

This command will log what it's doing to the terminal when you run it.

Internally, this command loops over all your sources which have been saved with
"write NFO files" or "copy thumbnails" enabled. It then loops over all media saved to
that source and confirms that the appropriate thumbnail files have been copied over and
the NFO file has been written if enabled.

@@ -1,81 +0,0 @@
# TubeSync

## Advanced usage guide - importing existing media

This is a new feature in v0.9 of TubeSync and later. It allows you to mark existing
downloaded media as "downloaded" in TubeSync. You can use this feature if, for example,
you already have an extensive catalogue of downloaded media which you want to mark
as downloaded into TubeSync so TubeSync doesn't re-download media you already have.

## Requirements

Your existing downloaded media MUST contain the unique ID. For YouTube videos, this
means the YouTube video ID MUST be in the filename.

Supported extensions to be imported are .m4a, .ogg, .mkv, .mp3, .mp4 and .avi. The
media you want to import must end in one of these file extensions.
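
Before moving files into TubeSync's download directories, a rough pre-check of which ones meet both requirements can be scripted. The sketch below is a heuristic, not TubeSync's own matching logic: it assumes YouTube IDs are 11 characters from `[A-Za-z0-9_-]` and that the ID appears as its own token in the filename, so it can report false positives (any 11-letter word) and miss IDs glued to other ID-like characters. `list_importable` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Heuristic sketch: list files that have a supported extension AND contain a
# token shaped like a YouTube video ID (11 chars of A-Z, a-z, 0-9, _ or -).
# This approximates the README's requirements; it is not TubeSync's matcher.
list_importable() {
  local dir="$1" path name lower
  local id_re='(^|[^A-Za-z0-9_-])[A-Za-z0-9_-]{11}([^A-Za-z0-9_-]|$)'
  while IFS= read -r -d '' path; do
    name="${path##*/}"
    lower="${name,,}"
    # Supported extensions from the README, matched case-insensitively
    case "$lower" in
      *.m4a|*.ogg|*.mkv|*.mp3|*.mp4|*.avi) ;;
      *) continue ;;
    esac
    if [[ "$name" =~ $id_re ]]; then
      printf '%s\n' "$path"
    fi
  done < <(find "$dir" -type f -print0 | sort -z)
}
```

Files it does not list are worth renaming (or leaving out) before the move, since TubeSync needs the ID in the filename to match them.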

## Caveats

As TubeSync does not probe media and your existing media may be re-encoded or in
different formats to what is available in the current media metadata, there is no way
for TubeSync to know what codecs, resolution, bitrate etc. your imported media is in.
Any manually imported existing local media will display blank boxes for this
information on the TubeSync interface as it's unavailable.

## Steps

### 1. Add your source to TubeSync

Add your source to TubeSync, such as a YouTube channel. **Make sure you untick the
"download media" checkbox.**

This will allow TubeSync to index all the available media on your source, but won't
start downloading any media.

### 2. Wait

Wait for all the media on your source to be indexed. This may take some time.

### 3. Move your existing media into TubeSync

You now need to move your existing media into TubeSync. You need to move the media
files into the correct download directories created by TubeSync. For example, if you
have downloaded videos for a YouTube channel "TestChannel", you would have added this
as a source called TestChannel with a directory called test-channel in TubeSync. It
would have a download directory created on disk at: